URL

https://arxiv.org/pdf/1903.06586.pdf

TL;DR

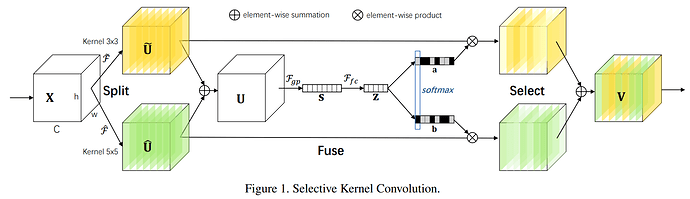

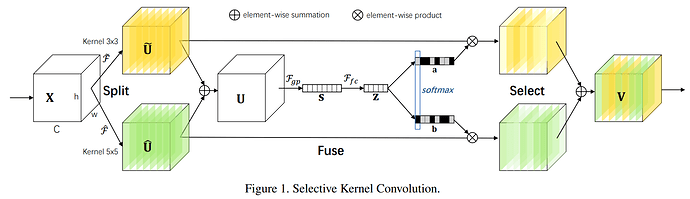

- SKNet assigns weights to the feature channels of N branches with different receptive fields, combining channel attention with kernel selection.

SKNet network architecture

Mathematical formulation

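$$X \in \mathbb{R}^{H' \times W' \times C'} \xrightarrow{\tilde{F}} \tilde{U} \in \mathbb{R}^{H \times W \times C}$$

$$X \in \mathbb{R}^{H' \times W' \times C'} \xrightarrow{\hat{F}} \hat{U} \in \mathbb{R}^{H \times W \times C}$$

$$U = \tilde{U} + \hat{U}$$

$$s_c = F_{gp}(U_c) = \frac{1}{H \times W} \sum_{i=1}^{H} \sum_{j=1}^{W} U_c(i, j)$$

$$z = F_{fc}(s) = \delta(\beta(Ws)), \quad W \in \mathbb{R}^{d \times C}, \quad d = \max\left(\frac{C}{r}, L\right)$$

$$a_c = \frac{e^{A_c z}}{e^{A_c z} + e^{B_c z}}, \quad b_c = \frac{e^{B_c z}}{e^{A_c z} + e^{B_c z}}, \quad A_c, B_c \in \mathbb{R}^{1 \times d}$$

$$V_c = a_c \cdot \tilde{U}_c + b_c \cdot \hat{U}_c, \quad V_c \in \mathbb{R}^{H \times W}$$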
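The fuse and select steps above can be traced with a small pure-Python sketch for the two-branch case. This is only an illustration under stated assumptions, not the paper's implementation: the function name `sk_select`, the nested-list layout `[C][H][W]`, and the argument names are made up here, δ is taken to be ReLU, and the batch-norm step β is treated as the identity to keep the example short.

```python
import math

def sk_select(u_tilde, u_hat, W_fc, A, B):
    """Two-branch fuse + select (hypothetical helper, not from the paper's code).

    u_tilde, u_hat: branch outputs as nested lists shaped [C][H][W].
    W_fc: [d][C] matrix; A, B: [C][d] matrices whose rows are A_c and B_c.
    Returns (V, a): the selected feature map and the weights a_c.
    """
    C, height, width = len(u_tilde), len(u_tilde[0]), len(u_tilde[0][0])

    # Fuse: U = U~ + U^ (element-wise sum of the two branches)
    U = [[[u_tilde[c][i][j] + u_hat[c][i][j] for j in range(width)]
          for i in range(height)] for c in range(C)]

    # s_c = global average pooling of U_c over H x W
    s = [sum(map(sum, U[c])) / (height * width) for c in range(C)]

    # z = delta(W s) with delta = ReLU; beta (batch norm) assumed to be identity
    z = [max(0.0, sum(w * x for w, x in zip(row, s))) for row in W_fc]

    V, a = [], []
    for c in range(C):
        logit_a = sum(p * q for p, q in zip(A[c], z))  # A_c z
        logit_b = sum(p * q for p, q in zip(B[c], z))  # B_c z
        m = max(logit_a, logit_b)  # subtract the max for numerical stability
        ea, eb = math.exp(logit_a - m), math.exp(logit_b - m)
        a_c = ea / (ea + eb)       # softmax over the two branches
        b_c = 1.0 - a_c            # a_c + b_c = 1
        a.append(a_c)
        # V_c = a_c * U~_c + b_c * U^_c
        V.append([[a_c * u_tilde[c][i][j] + b_c * u_hat[c][i][j]
                   for j in range(width)] for i in range(height)])
    return V, a

# Toy input: C = 2, H = W = 2, d = 1
u_tilde = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 0.0], [0.0, 0.0]]]
u_hat = [[[4.0, 3.0], [2.0, 1.0]], [[2.0, 2.0], [2.0, 2.0]]]
W_fc = [[1.0, 0.0]]
A = [[0.0], [0.0]]
B = [[0.0], [0.0]]
V, a = sk_select(u_tilde, u_hat, W_fc, A, B)
```

With `A` and `B` both zero, the two logits are equal, so every channel gets `a_c = b_c = 0.5` and `V` is the plain average of the two branches. Making a row of `A` larger than the matching row of `B` shifts that channel's weight toward the first branch.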

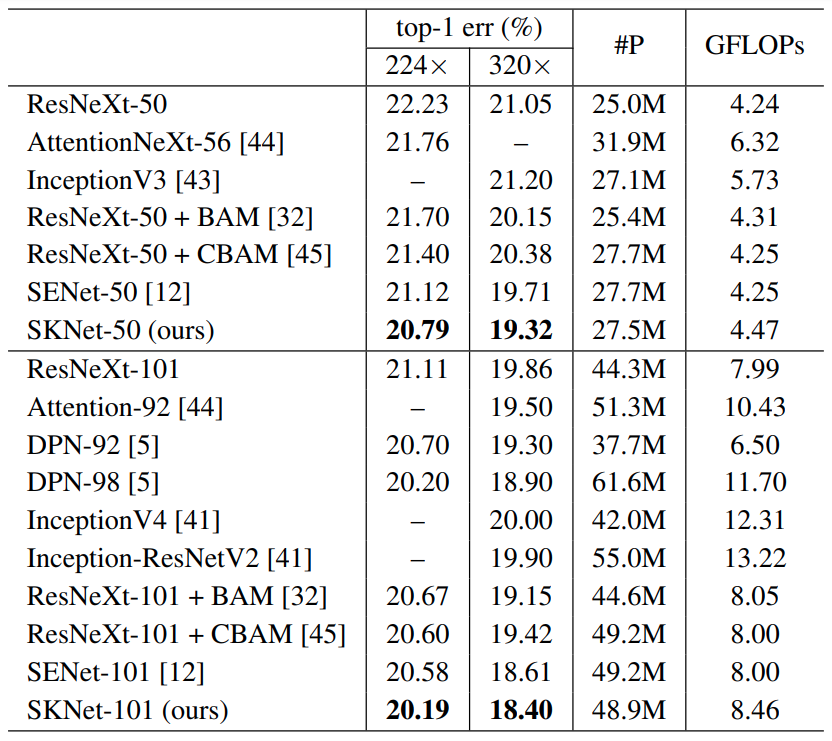

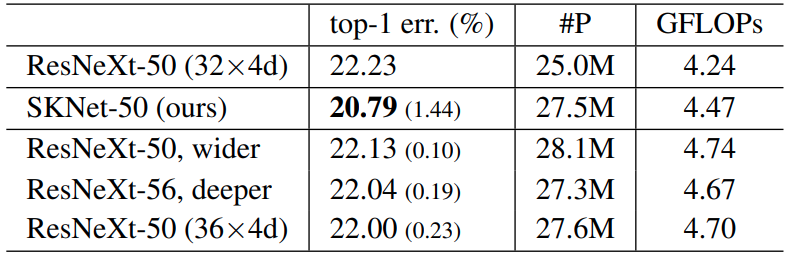

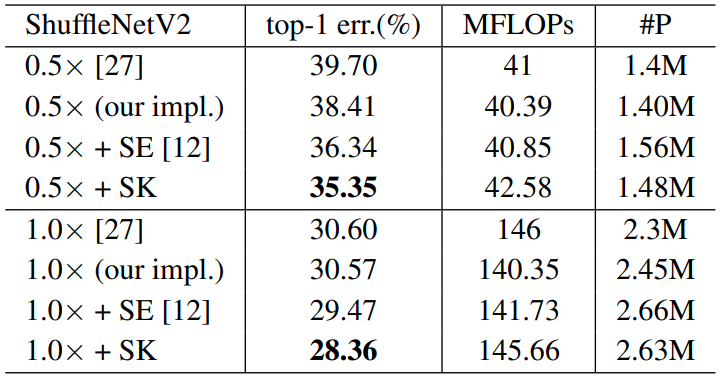

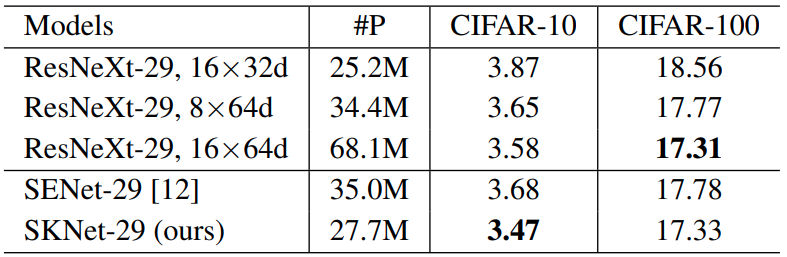

SKNet experimental results

Thoughts

SENet and SKNet apply attention over channels, while ULSAM applies attention over the spatial dimensions (HW). Could the two combined replace Non-local, which applies attention over THW?