URL

https://arxiv.org/pdf/1606.06160.pdf

TL;DR

- DoReFa-Net是一种神经网络量化方法,包括对weights、activations和gradients进行量化

- 量化模型的位宽重要性:gradient_bits > activation_bits > weight_bits

Algorithm

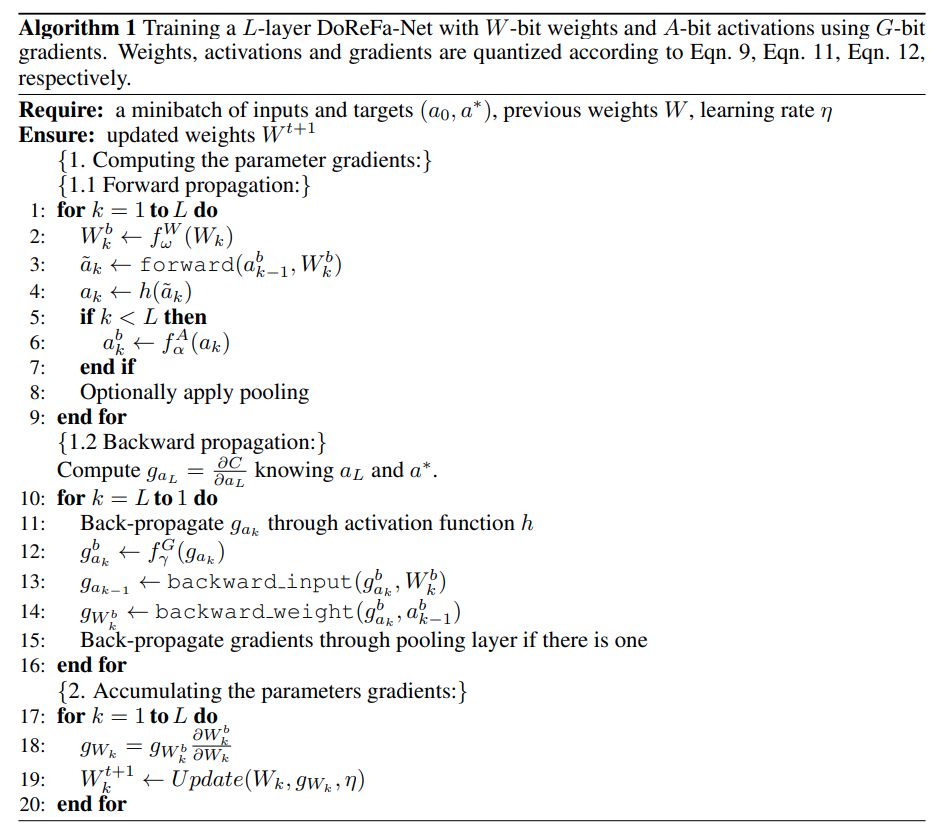

算法与实现角度理解:

总体流程

quantization of weights (v1) (上图 )

-

STE (straight-through estimator)

一种对本身不可微函数(例如四舍五入函数round()、符号函数sign()等)的手动指定微分的方法,量化网络离不开STE -

当1 == w_bits时:

其中 表示weight每层通道的绝对值均值 -

当1 < w_bits 时:

其中 quantize_k()函数是一个量化函数,使用类似四舍五入的方法,将 之间离散的数聚类到-

quantize_k():

-

-

quantization of weights 源码

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36def uniform_quantize(k):

class qfn(torch.autograd.Function):

def forward(ctx, input):

if k == 32:

out = input

elif k == 1:

out = torch.sign(input)

else:

n = float(2 ** k - 1)

out = torch.round(input * n) / n

return out

def backward(ctx, grad_output):

# STE (do nothing in backward)

grad_input = grad_output.clone()

return grad_input

return qfn().apply

class weight_quantize_fn(nn.Module):

def __init__(self, w_bit):

super(weight_quantize_fn, self).__init__()

assert w_bit <= 8 or w_bit == 32

self.w_bit = w_bit

self.uniform_q = uniform_quantize(k=w_bit)

def forward(self, x):

if self.w_bit == 32:

weight_q = x

elif self.w_bit == 1:

E = torch.mean(torch.abs(x)).detach()

weight_q = self.uniform_q(x / E) * E

else:

weight = torch.tanh(x)

max_w = torch.max(torch.abs(weight)).detach()

weight = weight / 2 / max_w + 0.5

weight_q = max_w * (2 * self.uniform_q(weight) - 1)

return weight_q

quantization of activations (上图 )

- 对每层输出量化

quantization of gradients (上图 )

- 对梯度量化

其中: 表示backward上游回传的梯度, 表示 gradient_bits, 表示随机均匀噪声 - 由于模型端上inference阶段不需要梯度信息,而大多数模型也不会在端上训练,再加上低比特梯度训练会影响模型精度,所以对梯度的量化大多数情况下并不会使用

其他方面

- 由于最后一层的输出分布与模型中输出的分布不同,所以为了保证精度,不对模型输出层output做量化(上图step 5, 6)

- 由于模型第一层的输入与中间层输入分布不同,而且输入通道数较小,weight不量化代价小,所以 第一层的weight做量化

- 使用多步融合,不保存中间结果会降低模型运行是所需内存