URL

http://openaccess.thecvf.com/content_cvpr_2018/papers/Wang_Two-Step_Quantization_for_CVPR_2018_paper.pdf

TL;DR

- 对于 weight 的量化与对 activation 的量化如果同时学习,模型收敛比较困难,所以分成 code learning 和 transformation function learning 两个过程进行

- code learning :先保持 weight 全精度,量化 activation

- transformation function learning:量化 weight,学习 Al−1→Al 的映射

- 最终结果:2-bits activations + 3 值 weights 的 TSQ 只比官方全精度模型准确率低0.5个百分点

Algorithm

传统量化网络

-

优化过程

minimize{Wl} L(ZL,y)

subject to W^l=QW(Wl)

Zl^=W^lA^l−1

Al=ψ(Zl)

A^l=Qn(A), forl=1,2,...,L

-

难收敛的原因

- 由于对 QW() , μ∂W∂L 难以直接更新到 W^ ,导致 W 更新缓慢

- QA() 的 STE 会引起梯度高方差

Two-Step Quantization (TSQ)

- step 1:code learning

基于 HWGQ,不同点是:

- weights 保持全精度

- 引入超参数:稀疏阈值 ϵ≥0 ,开源代码中 ϵ=0.32,δ=0.6487

Qϵ(x)={qi′0x∈(ti′,ti+1′]x≤ϵ

- 引入超参数的目的是使得网络更关注于 high activations

- step 2:transformation function learning

我对这个步骤的理解是:使用全精度weights网络蒸馏low-bits weights网络

Λ,W^minimize∥∥∥∥Y−Qϵ(ΛW^X)∥∥∥∥F2=minimize{αi},{w^iT}i∑∥∥∥yiT−Qϵ(αiw^iTX)∥∥∥22

其中: αi 表示每个卷积核的缩放因子,X与 Y 分别表示 A^l−1 与 A^l ,用全精度weights得到的量化activations网络蒸馏量化weights量化activations网络

引入辅助变量 z 对 transformation function learning 进行分解:

α,w,zminimize∥y−Qϵ(z)∥22+λ∥∥∥z−αXTw^∥∥∥22

- Solving α and w^ with z fixed:

α,w^minimizeJ(α,w^)=∥∥∥z−αXTw^∥∥∥22

J(α,w^)=zTz−2αzTXTw^+α2w^TXXTw^

α∗=w^TXXTw^zTXTw^

w^∗=w^argmaxw^TXXTw^(zTXTw^)2

- Solving z with α and w^ fixed:

ziminimize(yi−Qϵ(zi))2+λ(zi−vi)2

czi(0)=min(0,vi)

zi(1)=min(M,max(0,1+λλvi+yi)

zi(2)=max(M,vi)

-

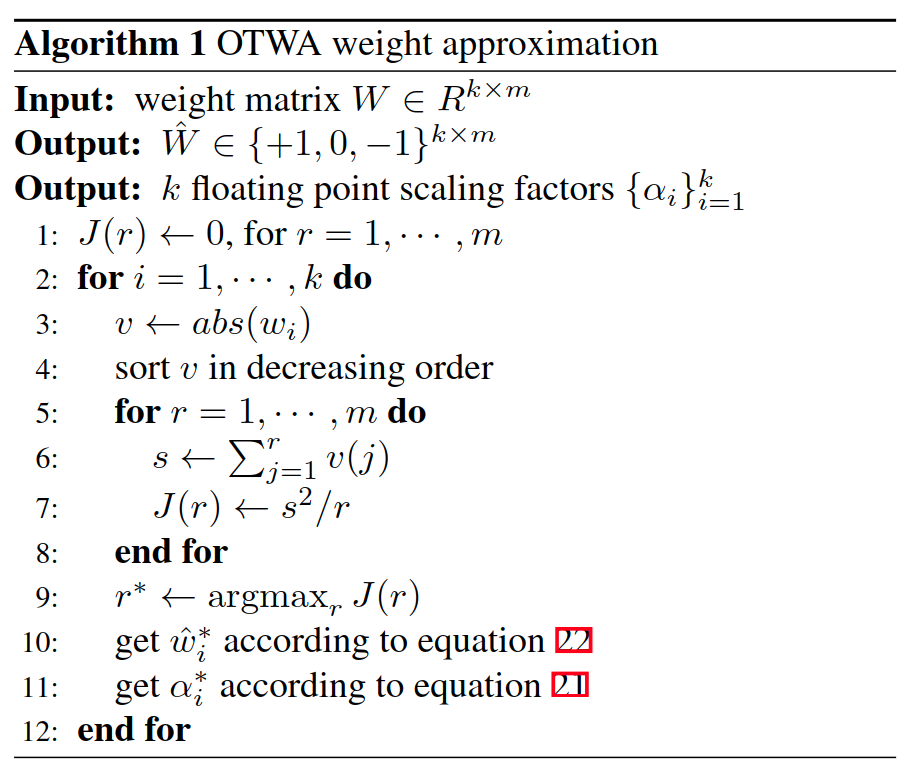

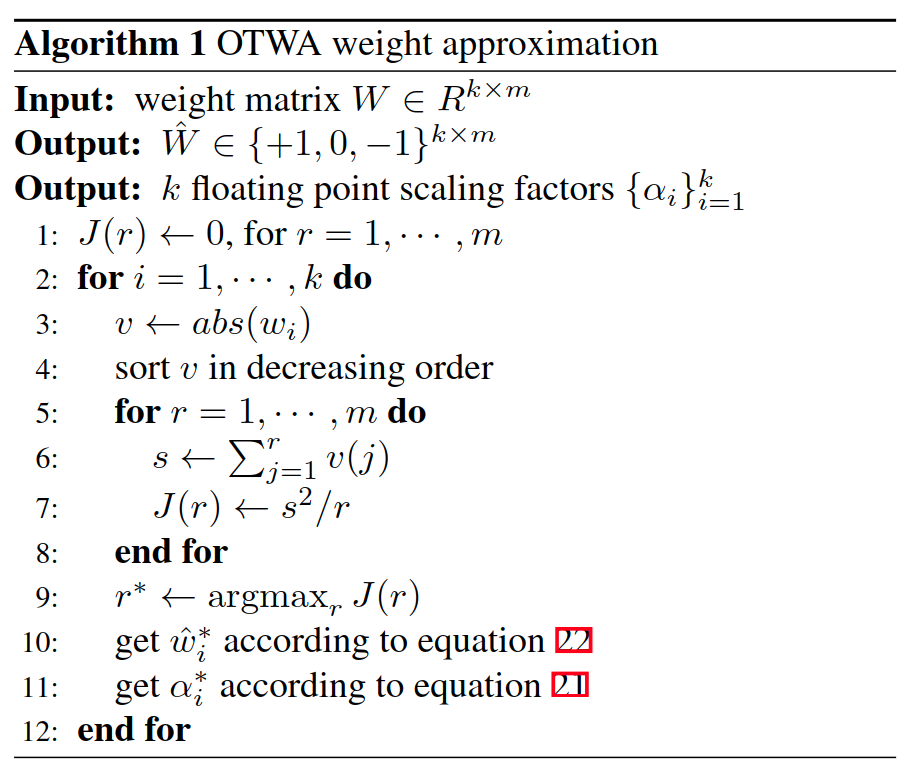

使用 Optimal TernaryWeights Approximation (OTWA) 初始化 α 和 W^

minα,w^ ∥w−αw^∥22 subject to α>0, w^∈{−1,0,+1}m

α∗=w^Tw^wTw^

w^∗=w^argmaxw^Tw^(wTw^)2

w^j={sign(wj)0abs(wj) in top r of abs(w) others

-

α 与w^ 初始值的计算过程 (OTWA)

Thoughts

- 对 weights 的量化与 activations 的量化拆分是一个容易想到的简化量化问题的方法

- 把对 weights 的量化转换成一种自蒸馏的方法,与 量化位宽 decay 有相似之处